When Russell M. Pitzer, Ph.D., helped establish the Ohio Supercomputer Center (OSC) in 1987, supercomputers were cooled with a liquid called Fluorinert. As system architecture changed over the years, centers moved to air cooling. But it seems fitting that OSC’s newest Dell EMC-built cluster, deployed just this year and named after Professor Emeritus Pitzer, has returned to this “retro” method of temperature regulation.

So, with technology always vaulting forward, why does it seem that we’re moving backward in this area?

First: Why supercomputers need to chill

Super or not, chips in any computer are powered by electrical impulses. Most of the power used by a supercomputer is lost to the internal resistance of the computer chips. Because this electricity does no mechanical work (it isn’t turning a turbine, for instance), it is released as heat.

“Ninety percent of the power use is going to the electrical components, and the dirty secret is that the (power) is ultimately dissipated to the room,” said Doug Johnson, chief systems architect and HPC systems group manager at OSC. “When you power a microprocessor or memory, there’s no mechanical work being done. So that power is all heat dissipation.”

Pack a bunch of chips together in giant racks and you’re suddenly talking about a lot of heat. Computer components can function at only so high a temperature before they begin to slow down, warp, and break. So we need to keep them cool.

Taking a dip in Fluorinert

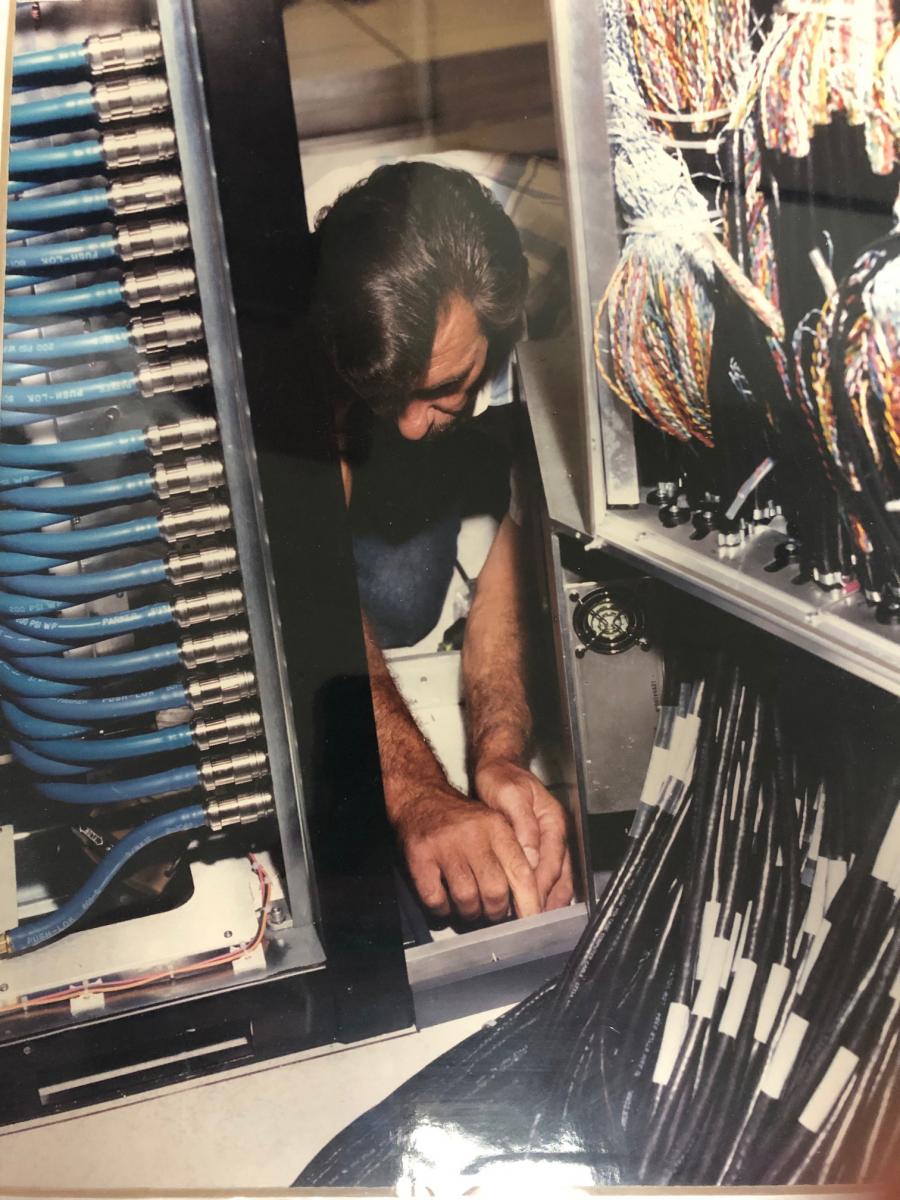

Earlier supercomputers, including the first ones deployed at OSC, were cooled using complete liquid submersion. That’s right, it’s against everything you know: electrical computer components touching, much less dipped in, liquid. But we’re not talking water here. Enter Fluorinert™, an electrically nonconductive cooling liquid created by 3M. Have you ever experienced how quickly you can cool down on a hot day by jumping into a swimming pool? Liquid cooling is preferable to air cooling because, pound for pound, water can absorb roughly four times as much heat as air, and because liquids are far denser than air, the advantage per unit of volume is much larger still. This means a liquid, Fluorinert in this case, is better at carrying heat. In the case of a supercomputer, this translates into carrying heat away from a system.
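To put rough numbers on the water-versus-air comparison, here is a minimal Python sketch. The density and specific heat figures are approximate room-temperature textbook values, not numbers from OSC, and the dictionary and function names are made up for illustration. Fluorinert is left out because its properties differ from water’s and are not given in this article.

```python
# Approximate room-temperature textbook values (assumptions, not OSC data).
WATER = {"density_kg_m3": 997.0, "cp_j_per_kg_k": 4180.0}
AIR = {"density_kg_m3": 1.2, "cp_j_per_kg_k": 1005.0}


def volumetric_heat_capacity(fluid):
    """Joules needed to warm one cubic metre of the fluid by one kelvin."""
    return fluid["density_kg_m3"] * fluid["cp_j_per_kg_k"]


# Ratio per unit mass: the "four times" comparison.
mass_ratio = WATER["cp_j_per_kg_k"] / AIR["cp_j_per_kg_k"]

# Ratio per unit volume: also accounts for water being far denser than air.
volume_ratio = volumetric_heat_capacity(WATER) / volumetric_heat_capacity(AIR)

print(f"Per unit mass, water holds about {mass_ratio:.1f}x the heat of air")
print(f"Per unit volume, water holds about {volume_ratio:.0f}x the heat of air")
```

The per-volume ratio is the one that matters inside a crowded rack, where the space available for moving coolant is limited.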

The move to air cooling

As the architecture of the crude older systems made way for more modern designs, it became possible to cool systems with a readily available, free resource: air. When moving to commodity clusters that took up more space and hence were less densely packed, it made sense to use fans to air cool the newer systems. Fans in the servers move masses of air past heat sinks to cool the system to an ideal operating temperature. This means the ambient air has to be cool, which also means cooling the room itself with air conditioning — the same room where the now-heated air is being expelled from the computer racks. Not exactly energy efficient.

“The cruder early technology required [liquid cooling], and it was a much more heroic cooling system in some ways in that it wasn’t just the processors, it was other components like voltage regulation modules and other pieces in the memory that required that extreme cooling,” Johnson said. “It was a measure of how inefficient the underlying circuit devices and other components were. And so it was a requirement. When we started using air-cooled systems it was a characteristic of the architecture that it could be air cooled. That didn’t mean it was easy, and in some cases, density was causing us to look at more extreme methods of cooling.”

As systems become more powerful, they require cooler rooms and faster airflow past the servers, both of which cost money, time, and floor space.

The return of liquid cooling

Today’s supercomputers are more powerful than ever, and thus require a lot more power. When OSC started looking at its next cluster, Johnson knew it was time to make the jump to liquid cooling. The group made a soft jump with the 2016 Owens system, which gets liquid cooling assistance through radiator doors. While Owens draws about the same amount of power per rack as Pitzer, each processor in Pitzer requires more power than the processors in Owens. Since potential for heat dissipation goes up in a nonlinear way for moving air, the choice to move to liquid cooling was simple. And another positive: technology has improved a bit in the last 30 years.

“What we have today is slightly different in that we do have liquid that goes into the computer itself, but it doesn’t interface directly with the electrical components, fully submerging them,” Johnson said. “It uses a cold plate instead, so it replaces the traditional heat sink on the computer processors.”

Another plus? It’s a warm-water system. Since computer chips are happy running at approximately 150 degrees Fahrenheit, it doesn’t take extremely cool water to bring the temperature down. CoolIT Systems’ reliable Direct Liquid Cooling system runs the water at a range of 55-85 degrees Fahrenheit, meaning the water itself does not have to be chilled, even in the middle of hot, humid Ohio summers. Water also has a much higher heat capacity than air, so it carries more energy away from the computer. To compare directly, both Pitzer and Owens use about 28-30 kW per rack. Pitzer uses approximately 16 ounces of water per minute to cool each node. Owens uses 41 cubic feet of air per minute per rack.
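The flow figures above can be turned into heat-carrying capacity with the standard relation: heat carried equals mass flow times specific heat times temperature rise. The Python sketch below does that under loud assumptions: the 10-kelvin coolant temperature rise is a placeholder rather than an OSC measurement, “ounces” is read as US fluid ounces, and the fluid properties are approximate textbook values. Note also that the article quotes the water flow per node and the airflow per rack, so this compares what each stream could carry, not how either system actually performs.

```python
OUNCE_TO_LITRE = 0.0295735     # US fluid ounce, assumed unit for "ounces"
CUBIC_FOOT_TO_M3 = 0.0283168

# Placeholder coolant temperature rise; not a measured OSC value.
ASSUMED_DELTA_T_K = 10.0


def heat_carried_watts(volume_flow_m3_s, density_kg_m3, cp_j_per_kg_k, delta_t_k):
    """Heat removed per second: mass flow x specific heat x temperature rise."""
    mass_flow_kg_s = volume_flow_m3_s * density_kg_m3
    return mass_flow_kg_s * cp_j_per_kg_k * delta_t_k


# Convert the article's per-minute figures to cubic metres per second.
water_flow = 16 * OUNCE_TO_LITRE / 1000 / 60   # 16 oz of water per minute
air_flow = 41 * CUBIC_FOOT_TO_M3 / 60          # 41 cubic feet of air per minute

# Approximate textbook properties: water ~997 kg/m3 and ~4180 J/(kg K),
# air ~1.2 kg/m3 and ~1005 J/(kg K).
water_watts = heat_carried_watts(water_flow, 997.0, 4180.0, ASSUMED_DELTA_T_K)
air_watts = heat_carried_watts(air_flow, 1.2, 1005.0, ASSUMED_DELTA_T_K)

print(f"Water stream: about {water_watts:.0f} W")
print(f"Air stream:   about {air_watts:.0f} W")
print(f"Air volume is about {air_flow / water_flow:.0f}x the water volume")
```

Under these assumptions, the small stream of water carries at least as much heat as an air stream thousands of times its volume, which is the practical meaning of water’s higher heat capacity.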

It is worth noting that Pitzer DOES still include some air cooling for memory and other system parts that aren’t the processors. But thanks to the heat removed by the liquid system, the fans can run at a much lower speed to achieve the cooling necessary for these parts.

“The bottom line is we’re not running a refrigeration cycle. That heat is not being dumped into the air, it doesn’t have to be cooled by an A/C that requires more power, and then we can also run the fans inside the computer at a lower speed, and fans can account for up to 10 percent of the power use in a server, so if we’re saving (a net) five percent there, that’s a nontrivial savings,” Johnson said.
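As a back-of-the-envelope illustration of what a net five percent means, the Python sketch below applies that figure to the 28-30 kW rack power quoted in this article. Applying a server-level percentage to a whole rack is a simplifying assumption, and the function name is hypothetical.

```python
def fan_savings_kw(rack_kw, net_saving_fraction=0.05):
    """Power saved per rack if slower fans trim the given fraction of the draw."""
    return rack_kw * net_saving_fraction


# Rack power range quoted in the article for both Pitzer and Owens.
for rack_kw in (28.0, 30.0):
    saved = fan_savings_kw(rack_kw)
    print(f"{rack_kw:.0f} kW rack: about {saved:.1f} kW saved")
```

Multiplied across every rack in a cluster and every hour of the year, a saving of that size adds up.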

“Water is the future.”

“Pitzer is kind of at an inflection point,” Johnson said. “Each processor in Pitzer requires more power than each of the processors in Owens. And the next generation of chips beyond Pitzer are just going up. And so, they’re getting to the point where the amount of heat that each processor will generate … it just won’t be possible to air cool it… Some road maps show 400W processors.”

It appears denser is better, and not just for liquid cooling purposes. The first supercomputers were often arranged with tightly packed racks so that the networking cables didn’t have to reach so far. The same holds true today: less floor space and closer racks mean engineers can use copper network cables instead of fiber optic, saving a little money.

“Water is the future,” Johnson said. “We were in a window of time where things could be air cooled for large HPC installations, but we’re getting back to water or some other more efficient medium for heat exchange.”

Russ Pitzer was working on the cutting edge of technology in 1987 when he and his colleagues envisioned and established the Ohio Supercomputer Center. He is pleased to know that his name and likeness adorn the endcap of a new cluster that represents another important step forward in supercomputing.